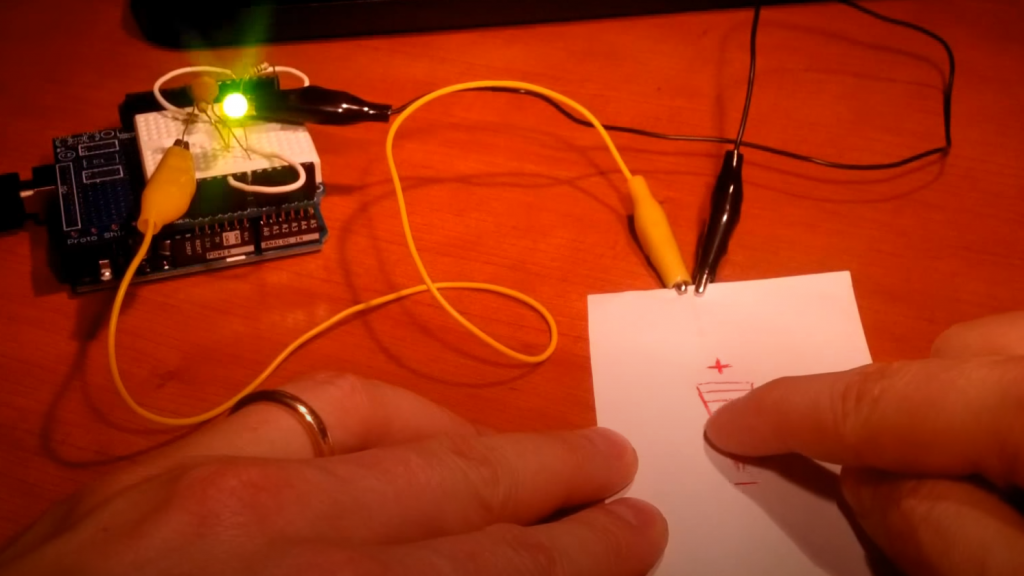

Conductive Ink Challenge

I was recently asked whether I wanted to take part in a competition organised by Newark Canada and presented with VHS (big thanks to Tom). The challenge focused on a very interesting and versatile product: conductive ink. I received a pen in the mail and started to investigate what I could do with it. The Conductive Ink Pen essentially allows …