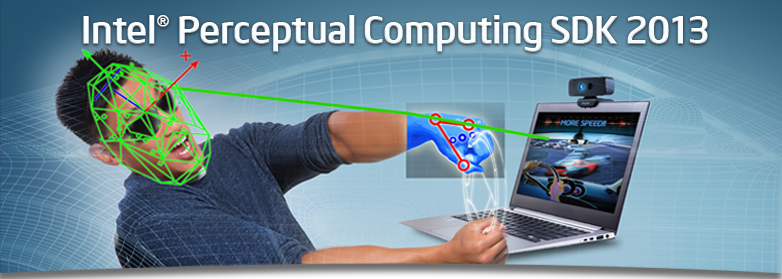

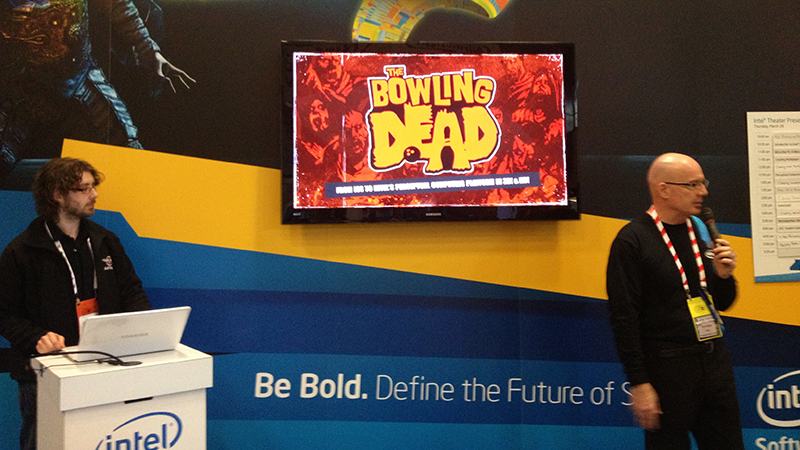

At GDC 2013 I spoke at “Natural, Intuitive, and Immersive Gaming With Intel Perceptual Computing” and at the Intel booth, introducing the emerging field of Physical Technical Art, an area that focuses on human-computer and human-human interactions and on physical workflows during game development and play. One of the main interaction devices I covered in my talk was the Intel Perceptual Computing Camera. In this article I’m going to talk about my experiences working with this hardware and how I used the provided SDK to port an iOS game to use its perceptual interface.

What is Perceptual Computing

Traditionally, when interacting with a computer you had only a handful of basic input systems: keyboard, mouse or trackball, joystick, or digitising tablet. Over the years this has expanded to include speech recognition, touch and multi-touch interfaces, eye and face tracking, motion and gesture tracking, and even scratch detection, to name a few.

Intel’s Perceptual Computing initiative focuses on the research and development of software and hardware that encompasses speech recognition, face identification, finger and hand gesture tracking, and augmented reality for consumers, in turn improving the Natural User Interface, or human-machine interface.

The Camera

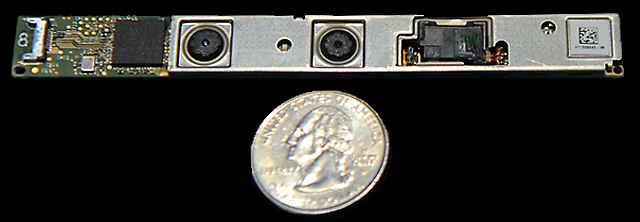

The Intel Perceptual Computing camera is actually a Creative camera: a USB 2.0 HD webcam with dual-array microphones and a depth sensor designed for close-range interactions. Unlike the Kinect, it doesn’t require any additional power, and it’s quite small, as you can see in the first image. The camera does some image and sound processing itself, but most of the magic is in Intel’s SDK. It’s great for laptops and desktops, but not so accessible to lower-power systems such as the Raspberry Pi or Arduino.

How it works

The Creative Camera is built from a number of parts, including an HD camera that captures 24-bit color at 30fps and stereo microphones, typically what you would find in a standard HD web camera. The other camera is an 8-bit infrared camera, with an infrared emitter right next to it, which can capture up to 60fps at 320×240 pixels.

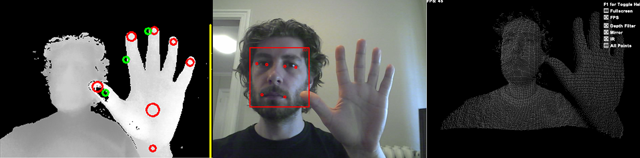

The HD color camera works as a normal camera would, although it has an infrared filter over the lens to stop light produced by the IR emitter from flooding the color image. The infrared camera is a little different; it works in tandem with the light. This camera uses a concept called Time of Flight, or ToF. It uses the known speed of light to measure distance, which requires some precision electronics to time the return of an IR flash at each pixel on the IR sensor. With this timing an image can be created with a gradient of distance, as you can see in the grayscale image below.

Anomalies can appear in the image if the surface you are illuminating reflects the light poorly or not at all, or if it is outside the sensor’s timing range. Glass is a great example of this: it is virtually invisible to the sensor, and we can use this to our advantage; I’ll go into a little more “depth” later. The IR light is similar to the light used in a remote control and, as with a remote, you can see the IR light on this camera with a mobile phone camera or any other camera that doesn’t have an IR filter on it.
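To make the Time of Flight idea concrete, here is a minimal sketch of the underlying arithmetic: light travels to the surface and back, so the distance is half the round trip multiplied by the speed of light. The function names, the near/far range and the "nearer is brighter" gray mapping are my own assumptions for illustration and are not taken from the camera firmware or the PCSDK.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_to_gray(distance_m: float, near_m: float, far_m: float) -> int:
    """Map a distance to an 8-bit gray level (assumed: nearer = brighter).

    Readings outside the assumed [near_m, far_m] range come back as 0,
    which is how out-of-range or non-reflective surfaces are modelled here.
    """
    if not (near_m <= distance_m <= far_m):
        return 0
    t = (far_m - distance_m) / (far_m - near_m)
    return round(t * 255)


if __name__ == "__main__":
    # A 4 ns round trip corresponds to roughly 0.6 m.
    d = tof_distance_m(4e-9)
    print(f"{d:.3f} m -> gray {depth_to_gray(d, 0.2, 1.0)}")
```

The tiny time scale (a few nanoseconds for close-range work) is why the timing electronics need to be so precise.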

The dual microphones not only offer high-quality audio capture at 48 kHz, they also provide positional information that can be used to identify who is talking in the image, or to pick up other gestural information such as clicking or tapping. The PCSDK also includes a speech recognition system for voice commands and dictation.
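As a rough illustration of how two microphones can yield positional information, the sketch below estimates the direction of a sound from the difference in its arrival time at each microphone. This is a generic far-field approximation, not the PCSDK's actual method, and the microphone spacing used in the example is a made-up value.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value in air at room temperature


def bearing_from_delay(delay_s: float, mic_spacing_m: float) -> float:
    """Angle of arrival in degrees (0 = straight ahead of the microphone pair).

    delay_s is the arrival-time difference between the two microphones;
    its sign tells us which side the sound came from.
    """
    if mic_spacing_m <= 0:
        raise ValueError("microphone spacing must be positive")
    ratio = SPEED_OF_SOUND_M_PER_S * delay_s / mic_spacing_m
    # Clamp to the valid asin domain to tolerate measurement noise.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # Hypothetical 10 cm spacing and a 0.1 ms delay between the microphones.
    print(f"{bearing_from_delay(1e-4, 0.10):.1f} degrees off-axis")
```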

With the information collected via the color and infrared sensors, the Intel PCSDK can algorithmically detect hand positions and gestures, detect faces and facial motion, and produce 3D point clouds of the viewed scene, as you can see in the images below.

The PCSDK is a simple-to-use but very powerful API that takes the hard work out of the computation and image manipulation required to track and detect these gestures. Information about each finger, palm or gesture is easy to query, whether you are using the API in a standalone app, incorporated into a presentation system such as Cinder, in a web app, or in a game engine, as I did with The Bowling Dead port.
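For readers curious how a depth image becomes a 3D point cloud, here is a minimal sketch using a simple pinhole camera model. It does not use the PCSDK; the field-of-view values are placeholders, and it assumes each depth value is the distance along the optical axis in metres, with 0 meaning "no reading".

```python
import math


def depth_to_points(depth_m, h_fov_deg, v_fov_deg):
    """Back-project a depth image into a list of (x, y, z) points in metres.

    depth_m is a list of rows; each value is an assumed z distance,
    and values <= 0 are treated as missing and skipped.
    """
    height = len(depth_m)
    width = len(depth_m[0])
    # Focal lengths in pixels, derived from the (assumed) fields of view.
    fx = (width / 2) / math.tan(math.radians(h_fov_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(v_fov_deg) / 2)
    # Principal point assumed to be at the image centre.
    cx = (width - 1) / 2
    cy = (height - 1) / 2

    points = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no depth reading for this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    tiny_depth = [
        [1.0, 1.0, 1.0],
        [1.0, 2.0, 1.0],
        [0.0, 1.0, 1.0],
    ]
    for point in depth_to_points(tiny_depth, 90.0, 90.0):
        print(point)
```

On the real 320×240 depth stream you would want a vectorised version, but the per-pixel maths is the same.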

Comparable Technologies

There are a number of hardware devices similar to the Intel Camera, each with its own strengths and weaknesses. I have included some of the major devices that are currently popular and in use.

Kinect 1 & 2

The Kinect for PC has been a staple for hackers for a number of years now. Many applications have been developed for it, ranging from full-body motion capture systems to 3D scanners. The resolution of the image it provides is quite low, however, and the hardware’s age is starting to tell. The Kinect 1 camera uses a slightly different technique for constructing its 3D scene, namely Structured Light. Essentially, this system projects an IR pattern (structured light) over the scene and then reconstructs the scene’s depth from the distortion of that pattern.

With the second generation of Kinect currently shipping with Xbox One, a PC version will soon be available. This new hardware has switched to a ToF system with an HD sensor, which will greatly improve its depth quality and overall tracking. One of the major downsides of the Kinect is its form factor: the hardware is designed as a tabletop device, not an ultra-portable one, and requires external power in addition to the USB connection. Access to the Kinect 2 API is currently restricted but will be released soon.

Leap Motion

The Leap Motion is built from two cameras and three IR LEDs and runs completely off USB. Measuring only 3” long, the unit is extremely responsive, fits nicely in front of your keyboard, and will soon be integrated into one. With its cameras pointing directly up, it is able to capture fingertips and hand motion smoothly and at a high frame rate. It is best suited to interaction with palms facing down towards the camera. The recent SDK 2.0 improves hand and finger tracking, with better handling of occluded fingers and labeling of hands and fingers.

Intel Camera

Overall, the Intel PCSDK is the powerhouse behind this developer hardware. It provides a great deal of information about the subject, with hand, face and gesture tracking along with its voice recognition software. Its medium form factor means it can easily be taken wherever you go, and it runs completely off USB.

Now that the developer hardware has been out for a year, Intel has announced and demoed at CES 14 and IDF Shenzhen 2014 that within this year we will see a greatly miniaturized device embedded in laptops and tablets. This is going to have a phenomenal effect on how we interact with our hardware. I’ll certainly be looking forward to testing out the new incarnation of this device.

Experiments

Over the months leading up to GDC 13 I was able to test out a couple of features of the SDK. I was particularly interested in seeing how a touch based game would adapt to the Intel Camera.

The Bowling Dead – iOS to Perceptual Computing SDK conversion

The Bowling Dead is an iOS game that uses a touch swipe mechanic to bowl various bowling balls, some explosive, at the ever-increasing zombie horde marching towards you in an alleyway. If a zombie reaches you, the game switches to a frantic melee and you have to get the zombie off you before it devours you.

Hand Tracking in Maya

Using the SDK I decided to test to see if hand tracking would work within Maya. It was relatively easy to get it all hooked up and operational. I used a combination of C# with Python.Net and it was surprisingly fast. This could be extended to allow the manipulation of other objects or brushes within the scene or used as a general motion capture interface.

Head Tracking in Maya (Perspective coupled Head Tracking)

In this video you can see perspective-coupled head tracking. This system drives the camera in planar space based on the movement of the user’s head, producing perspective parallax, which is a component of depth perception. In addition to this technique, the user could wear anaglyph glasses (typically red and blue) or polarized glasses, adding stereo vision, which is key to producing immersion.
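One common way to produce this kind of head-coupled parallax is an off-axis (asymmetric) view frustum, where the screen is treated as a fixed window and the frustum edges are recomputed from the tracked head position. The sketch below shows that calculation only; it is not necessarily how the Maya experiment was wired up, and the function name, units and coordinate convention (screen centred at the origin in the z = 0 plane, viewer at positive z) are assumptions.

```python
def off_axis_frustum(head_x, head_y, head_z, screen_w, screen_h, near):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    The head position is measured from the centre of the screen, in the
    same units as screen_w and screen_h. head_z is the distance from the
    screen plane to the viewer and must be positive.
    """
    if head_z <= 0:
        raise ValueError("the viewer must be in front of the screen")
    # Similar triangles: project the screen edges onto the near plane.
    scale = near / head_z
    left = (-screen_w / 2 - head_x) * scale
    right = (screen_w / 2 - head_x) * scale
    bottom = (-screen_h / 2 - head_y) * scale
    top = (screen_h / 2 - head_y) * scale
    return left, right, bottom, top


if __name__ == "__main__":
    # Hypothetical 0.5 m x 0.3 m screen, viewer 0.5 m away.
    print(off_axis_frustum(0.0, 0.0, 0.5, 0.5, 0.3, 0.1))  # centred head
    print(off_axis_frustum(0.1, 0.0, 0.5, 0.5, 0.3, 0.1))  # head moved right
```

With the head centred the frustum is symmetric; as the head moves right, the frustum skews left, which is what makes the scene appear to sit behind the screen. The four values can be fed to any projection setup that accepts asymmetric extents, together with a camera translated to the head position.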